Dancing with AI

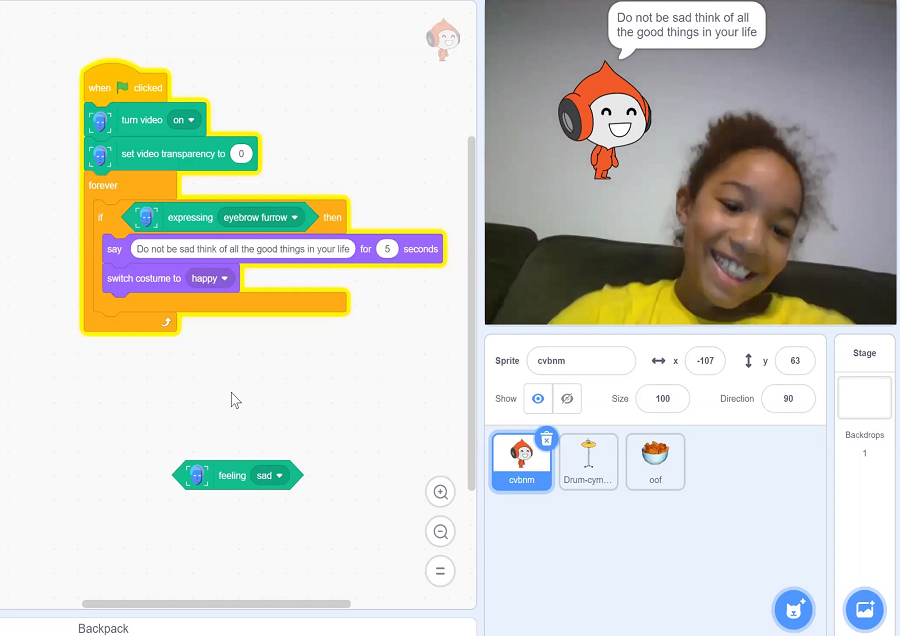

Physical movement is one of the most engaging ways to interact with AI systems, yet it is rarely integrated into K-12 AI curricula today. Many middle schoolers are also passionate about dance, art, sports, and motion-based video games such as Beat Saber and Just Dance. These interests are hard to build on in the creative learning environments typically found in classrooms. Dancing with AI is a week-long workshop curriculum in which students conceptualize, design, build, and reflect on interactive, movement-based multimedia experiences. Students learn to build interactive AI projects using two new Scratch extensions developed for this curriculum: (1) blocks that track hand, body, and face positions and detect facial expressions, based on Google's PoseNet and MediaPipe machine learning models and Affectiva's face model, and (2) Teachable Machine blocks that let students train their own image- and pose-recognition models with Google's Teachable Machine and use those models in their projects.

Team

Brian Jordan

Personal Robots Group, MIT Media Lab

Jenna Hong

Personal Robots Group, MIT Media Lab

Nisha Devasia

Personal Robots Group, MIT Media Lab

Randi Williams

Personal Robots Group, MIT Media Lab

Cynthia Breazeal

Personal Robots Group, MIT Media Lab